How to Unzip Files from an S3 Bucket Using AWS Lambda

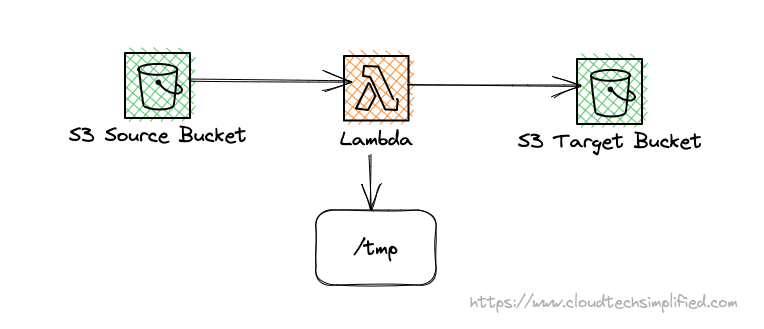

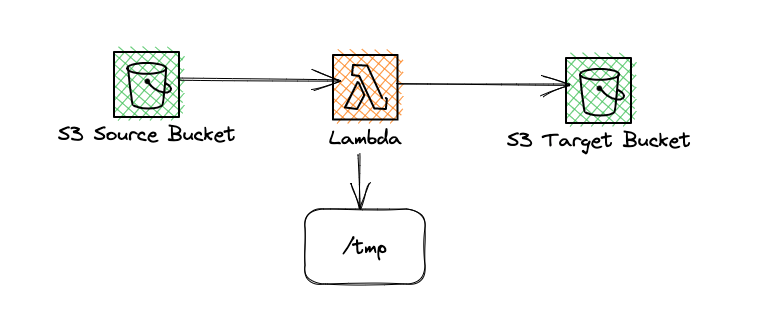

This article discusses how to automatically unzip files into a target bucket whenever you upload a zip file to a source bucket.

We'll use the decompress npm package to unzip the files and write them to the /tmp directory, and then copy all the files to the target bucket. I've written a similar article on how to untar a file here.

Before discussing how to unzip the files using AWS Lambda, let us create a zip file from a directory. When you compress a directory on a Mac (for example, with Finder's Compress option), the archive typically also contains a __MACOSX directory holding metadata. We don't need that for this tutorial, since we want to zip only the files in the source directory and not any metadata.

You can use the below command to zip only the source files without any metadata directory.

zip -r topDirectory.zip topDirectory -x '**/.*' -x '**/__MACOSX'

Please note that the directory topDirectory contains text files spread across multiple sub-directories.
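If you can't control how the archive was created, a similar exclusion could be applied at unzip time instead. The sketch below is only an illustration: `isMacMetadataPath` is a hypothetical helper (not part of any library), and it assumes that any path segment named `__MACOSX` or starting with a dot should be treated as metadata, which roughly mirrors the intent of the two `-x` patterns above.

```typescript
// Hypothetical helper: returns true for archive entries that look like
// macOS metadata (a __MACOSX directory) or hidden dotfiles such as .DS_Store.
function isMacMetadataPath(entryPath: string): boolean {
  // Split on both separators so Windows-style paths are handled too.
  const segments = entryPath.split(/[\\/]+/).filter((s) => s.length > 0);
  return segments.some(
    (s) =>
      s === "__MACOSX" ||
      // "." and ".." are navigation segments, not hidden files.
      (s.charAt(0) === "." && s !== "." && s !== "..")
  );
}

const entries = [
  "topDirectory/a.txt",
  "topDirectory/sub/b.txt",
  "__MACOSX/topDirectory/._a.txt",
  "topDirectory/.DS_Store",
];

// Keep only the entries that are not metadata.
const kept = entries.filter((p) => !isMacMetadataPath(p));
console.log(kept);
```

With the decompress package, a predicate like this could be passed through its `filter` option, for example `decompress(input, output, { filter: (file) => !isMacMetadataPath(file.path) })`.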

Infrastructure

We're going to use AWS CDK for creating the necessary infrastructure. It's an open-source software development framework that lets you define cloud infrastructure. AWS CDK supports many languages including TypeScript, Python, C#, Java, and others. You can learn more about AWS CDK from a beginner's guide here. We're going to use TypeScript in this article.

At a high level, we need just three resources:

- Source S3 bucket

- Lambda function to unzip the files

- Target S3 bucket

Creation of buckets

I'm using the code below to create a source bucket (ZipBucket) and a target bucket (UnzipBucket). Random numbers are appended to the bucket names just to make them unique.

const zipBucket = new s3.Bucket(this, "ZipBucket", {
  bucketName: "zip-bucket-07071005",
  removalPolicy: cdk.RemovalPolicy.DESTROY,
  autoDeleteObjects: true,
});

const unzipBucket = new s3.Bucket(this, "UnzipBucket", {
  bucketName: "unzip-bucket-07071005",
  removalPolicy: cdk.RemovalPolicy.DESTROY,
  autoDeleteObjects: true,
});

Creation of Lambda Function

We'll use the Node.js 18 runtime and grant the Lambda function the permissions it needs: read access to the source S3 bucket and write access to the target S3 bucket, so that it can unzip the files and write them to the target bucket.

We also need to trigger the Lambda function whenever a zip file is uploaded to the source S3 bucket.
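Whether or not the notification itself is filtered, the handler can cheaply verify that an uploaded key looks like a zip file before doing any work. Here is a minimal sketch, assuming hypothetical helper names `isZipKey` and `zipRecords` and a hand-written `RecordLike` type that stands in for the relevant part of an S3 event record. The check looks only at the file name, not at the file contents.

```typescript
// Hypothetical helper: true when an S3 object key ends in ".zip"
// (case-insensitive). This inspects the name only, not the contents.
function isZipKey(objectKey: string): boolean {
  return objectKey.toLowerCase().slice(-4) === ".zip";
}

// Minimal stand-in for the parts of an S3 event record used here.
interface RecordLike {
  s3: { bucket: { name: string }; object: { key: string } };
}

// Keep only the records whose object key looks like a zip file.
function zipRecords(records: RecordLike[]): RecordLike[] {
  return records.filter((r) => isZipKey(r.s3.object.key));
}

const bucket = { name: "zip-bucket-07071005" };
const sample: RecordLike[] = [
  { s3: { bucket, object: { key: "topDirectory.zip" } } },
  { s3: { bucket, object: { key: "notes.txt" } } },
  { s3: { bucket, object: { key: "ARCHIVE.ZIP" } } },
];

const zipKeys = zipRecords(sample).map((r) => r.s3.object.key);
console.log(zipKeys);
```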

const nodeJsFunctionProps: NodejsFunctionProps = {
  runtime: Runtime.NODEJS_18_X,
  timeout: Duration.minutes(3), // Default is 3 seconds
  memorySize: 256,
};

const unzipFn = new NodejsFunction(this, "unzipFn", {
  entry: path.join(__dirname, "../src/lambdas", "unzip.ts"),
  ...nodeJsFunctionProps,
  functionName: "unzipFn",
  environment: {
    TARGET_BUCKET: unzipBucket.bucketName,
  },
});

// Write access to the target bucket, read access to the source bucket
unzipBucket.grantWrite(unzipFn);
zipBucket.grantRead(unzipFn);

// Invoke the function when an object is created in the source bucket
unzipFn.addEventSource(
  new S3EventSource(zipBucket, {
    events: [s3.EventType.OBJECT_CREATED],
    filters: [{ suffix: ".zip" }], // only keys ending in .zip
  })
);

Lambda function source code

The actual unzipping happens in this Lambda function. We're going to use the decompress npm package to unzip the files. This package requires a file system path to write to, so we'll give it the path /tmp/unzipped for storing the unzipped files. Finally, we'll upload the unzipped files to the target S3 bucket.
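Each unzipped file needs an object key in the target bucket. One way to derive it, sketched here under the assumption that the archive's internal directory layout should be preserved, is to normalize the entry path reported by the unzip step. `toObjectKey` and its optional `prefix` parameter are hypothetical names introduced only for this illustration.

```typescript
// Hypothetical helper: turns an archive entry path into an S3 object key.
// Assumption: the archive's directory layout is kept as-is in the bucket.
function toObjectKey(entryPath: string, prefix: string = ""): string {
  const segments = entryPath
    .replace(/\\/g, "/") // normalize Windows-style separators
    .split("/")
    .filter((s) => s.length > 0 && s !== "."); // drop empty and "." segments

  // Refuse entries that try to climb out of the archive root.
  if (segments.indexOf("..") !== -1) {
    throw new Error("Refusing path with '..' segment: " + entryPath);
  }

  const cleanPrefix = prefix.replace(/^\/+|\/+$/g, ""); // trim slashes
  const key = segments.join("/");
  return cleanPrefix.length > 0 ? cleanPrefix + "/" + key : key;
}

const plainKey = toObjectKey("topDirectory/sub/b.txt");
const prefixedKey = toObjectKey("/topDirectory\\a.txt", "unzipped/");
console.log(plainKey);
console.log(prefixedKey);
```

Rejecting `..` segments is a defensive choice for archives from untrusted sources. Whether it is needed depends on what the unzip library already guarantees.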

import { S3Event } from "aws-lambda";
import decompress from "decompress";
import * as fs from "fs";
import * as path from "path";
import {
  S3Client,
  GetObjectCommand,
  GetObjectCommandInput,
  PutObjectCommand,
  PutObjectCommandInput,
} from "@aws-sdk/client-s3";
import { Readable } from "stream";
import { pipeline } from "stream/promises";

export const handler = async (
  event: S3Event,
  context: any = {}
): Promise<any> => {
  const region = process.env.AWS_REGION || "us-east-1";
  const targetBucketName = process.env.TARGET_BUCKET || "";
  const client = new S3Client({ region });

  for (const record of event.Records) {
    try {
      const bucketName = record?.s3?.bucket?.name || "";
      // Keys in S3 events are URL-encoded, with spaces sent as "+"
      const objectKey = decodeURIComponent(
        (record?.s3?.object?.key || "").replace(/\+/g, " ")
      );

      // Get the zip object from S3
      const params: GetObjectCommandInput = {
        Bucket: bucketName,
        Key: objectKey,
      };
      // Use only the file name, so keys with prefixes need no sub-directories in /tmp
      const localFilePath = path.join("/tmp", path.basename(objectKey));
      const response = await client.send(new GetObjectCommand(params));

      // Wait until the download is fully written to disk before unzipping
      await pipeline(
        response.Body as Readable,
        fs.createWriteStream(localFilePath)
      );

      // Unzip the file
      const files = await decompress(localFilePath, "/tmp/unzipped");

      for (const file of files) {
        // Directory entries have no content to upload
        if (file.type === "directory") continue;

        const filePath = file.path.replace("/tmp/unzipped", "");
        console.log("targetBucketName:", targetBucketName);
        console.log("filePath", filePath);
        console.log("file.data length", file.data.length);

        const putObjectParams: PutObjectCommandInput = {
          Bucket: targetBucketName,
          Key: filePath,
          Body: file.data,
        };
        const putResponse = await client.send(
          new PutObjectCommand(putObjectParams)
        );
        console.log("response", putResponse);
      }
    } catch (err: any) {
      console.log(err);
    }
  }
};
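One detail worth knowing when reading keys from an S3 event: the object key arrives URL-encoded, with spaces represented as `+`. A key such as `my files/archive.zip` therefore shows up as `my+files/archive.zip`, and using it unchanged in a `GetObjectCommand` would look up an object that doesn't exist. A minimal sketch of the decoding step, assuming a hypothetical helper name `decodeS3Key`:

```typescript
// Hypothetical helper: converts the URL-encoded key from an S3 event
// record back into the real object key.
function decodeS3Key(rawKey: string): string {
  // "+" stands for a space in the event payload; a literal plus sign
  // arrives as "%2B" and is restored by decodeURIComponent.
  return decodeURIComponent(rawKey.replace(/\+/g, " "));
}

const keyWithSpace = decodeS3Key("my+files/archive.zip");
const keyWithPlus = decodeS3Key("reports/2023%2B07.zip");
console.log(keyWithSpace);
console.log(keyWithPlus);
```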

Please let me know your thoughts in the comments.