Using Elastic File System (EFS) with AWS Lambda

In this tutorial, we're going to learn how to use Elastic File System (EFS) with AWS Lambda using TypeScript. A Python version of this article is available here.

We'll be using AWS CDK in this guide. It's an open source software development framework that lets you define cloud infrastructure. AWS CDK supports many languages including TypeScript, Python, C#, Java and others. We're going to use TypeScript in this tutorial.

When you deploy with the cdk deploy command, your code is synthesized into CloudFormation templates and all the corresponding AWS resources are created. Only basic knowledge of CDK and TypeScript is required to follow this tutorial. Of course, you need an AWS account to create AWS resources.

You can learn more about AWS CDK from a beginner's guide here.

Introduction to AWS Lambda and EFS

AWS Lambda is a serverless, event-driven compute service which lets you run your code without having to provision servers.

AWS Elastic File System (EFS) is a file system that automatically grows and shrinks as you add and remove files. Technically, EFS is a managed Network File System (NFS): clients mount it over the NFSv4 protocol.

Why would you use Elastic File System (EFS) with AWS Lambda

Let's consider a scenario where you're building a serverless video processing application. In this application, you might have to write some large files and share them between Lambda functions: some functions write the files, and others read the same files for further processing or to store metadata in a database.

You may think of writing to the /tmp folder. Lambda has increased the ephemeral storage available in /tmp: it can now be configured anywhere from 512 MB up to 10 GB (10,240 MB). Earlier it was fixed at 512 MB, which is still the default.

Please note that /tmp is ephemeral storage. Each execution environment has its own /tmp, so data written there is not shared with other functions or with concurrent invocations, and it is lost whenever the environment is recycled. A later invocation may find the data only if it happens to reuse the same warm environment, and you cannot rely on that.
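To make the /tmp behaviour concrete, here is a minimal sketch of a handler that caches a result in /tmp. The helper getOrCreateCache and the file name cache.txt are hypothetical. The point is that a cache hit happens only when Lambda reuses the same warm execution environment, so one function can never read what another function wrote to its own /tmp.

```typescript
import { existsSync, readFileSync, writeFileSync } from "node:fs";
import { join } from "node:path";

// Hypothetical helper: return cached content if an earlier invocation in the
// same execution environment already wrote it to `dir`, otherwise produce it.
export function getOrCreateCache(
  dir: string,
  name: string,
  produce: () => string
): { content: string; hit: boolean } {
  const file = join(dir, name);
  if (existsSync(file)) {
    // Warm start in the same environment: the file survived the last invocation.
    return { content: readFileSync(file, "utf8"), hit: true };
  }
  // Cold start, or a different environment: its /tmp starts out empty.
  const content = produce();
  writeFileSync(file, content);
  return { content, hit: false };
}

export const handler = async (): Promise<void> => {
  const { hit } = getOrCreateCache("/tmp", "cache.txt", () => "expensive result");
  console.log(hit ? "reused /tmp from a warm start" : "/tmp was empty");
};
```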

In these cases, you can use EFS, a serverless, elastic file system that grows and shrinks as you add or remove files.

Elastic File System is also a better fit if you want a durable file system that other services in your application ecosystem can use.

How Elastic File System (EFS) works with Lambda

When an Elastic File System is created, its root directory / is owned by the root user (UID 0), which has read, write and execute permissions on it by default. Other users can only read and traverse it (permissions 755).

Of course, you can create more directories in EFS later, as you wish.

Access point & Mount Path

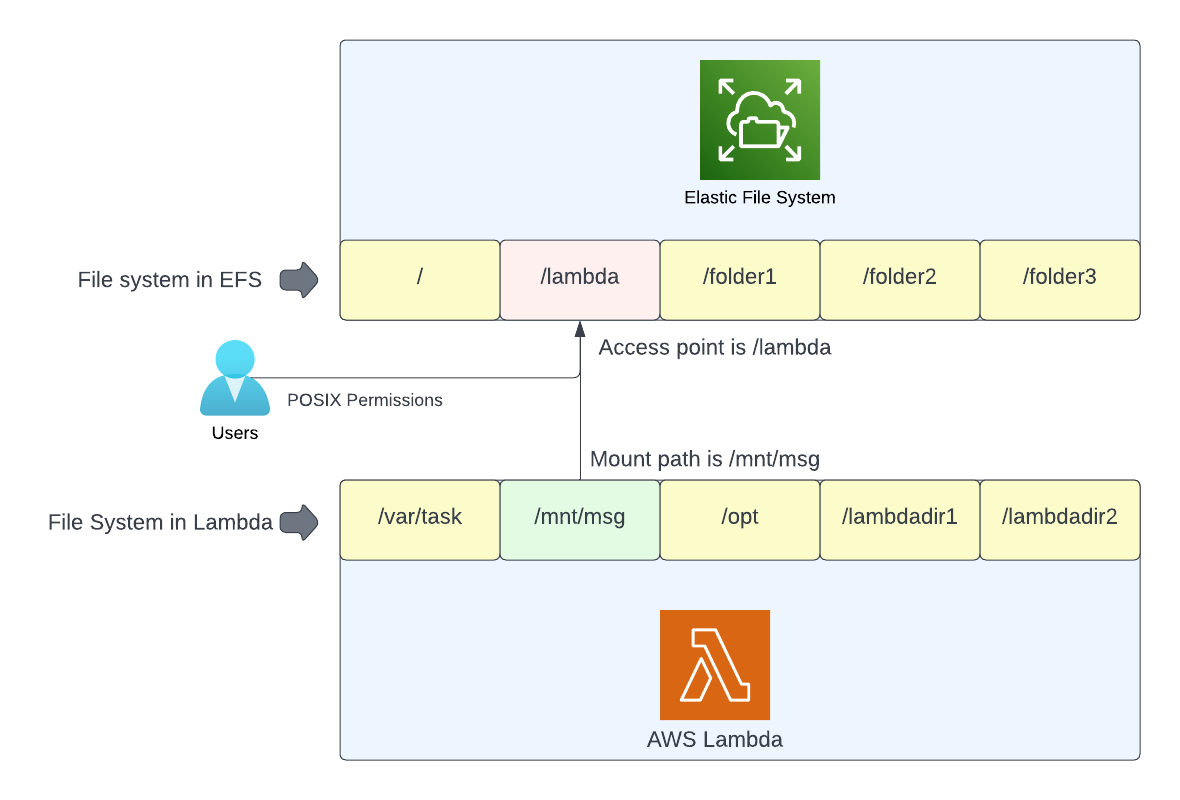

An access point is an application-specific entry point into the elastic file system. It exposes one directory of the file system to the client (Lambda, in our case). For example, you may have a Lambda function that writes files to EFS, and you want that function restricted to reading and writing in one specific directory, with no access (read, write or execute) to any other directory in EFS.

The mount path is the directory on the client side (inside the Lambda function) where the access point is mounted. Lambda requires the mount path to start with /mnt/.

The diagram in this section illustrates how the access point and the mount path relate.
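One way to picture this relationship is as a path translation. The sketch below is a hypothetical model (toEfsPath is not an AWS API): it maps a path used inside the function, such as /mnt/msg/content.txt, to the corresponding path inside EFS under the access point's root directory, such as /lambda/content.txt, and it enforces the /mnt/ prefix that Lambda requires for mount paths. The values /mnt/msg and /lambda are the ones this tutorial uses later.

```typescript
import * as path from "node:path";

// Hypothetical model of how a path inside the function maps to a path in EFS.
export function toEfsPath(localPath: string, mountPath: string, accessPointRoot: string): string {
  // Lambda only accepts local mount paths under /mnt/.
  if (!mountPath.startsWith("/mnt/")) {
    throw new Error(`mount path must start with /mnt/: ${mountPath}`);
  }
  const rel = path.posix.relative(mountPath, path.posix.normalize(localPath));
  // Paths outside the mount path are ordinary Lambda storage, not EFS.
  if (rel === ".." || rel.startsWith("../")) {
    throw new Error(`${localPath} is not under ${mountPath}`);
  }
  return path.posix.join(accessPointRoot, rel);
}

console.log(toEfsPath("/mnt/msg/content.txt", "/mnt/msg", "/lambda")); // /lambda/content.txt
```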

POSIX permissions for access point

In addition to the path, an access point also defines the POSIX user that every request made through it runs as. You specify a user ID and group ID, and, for the directory the access point creates, the owner IDs and access permissions in standard octal format.

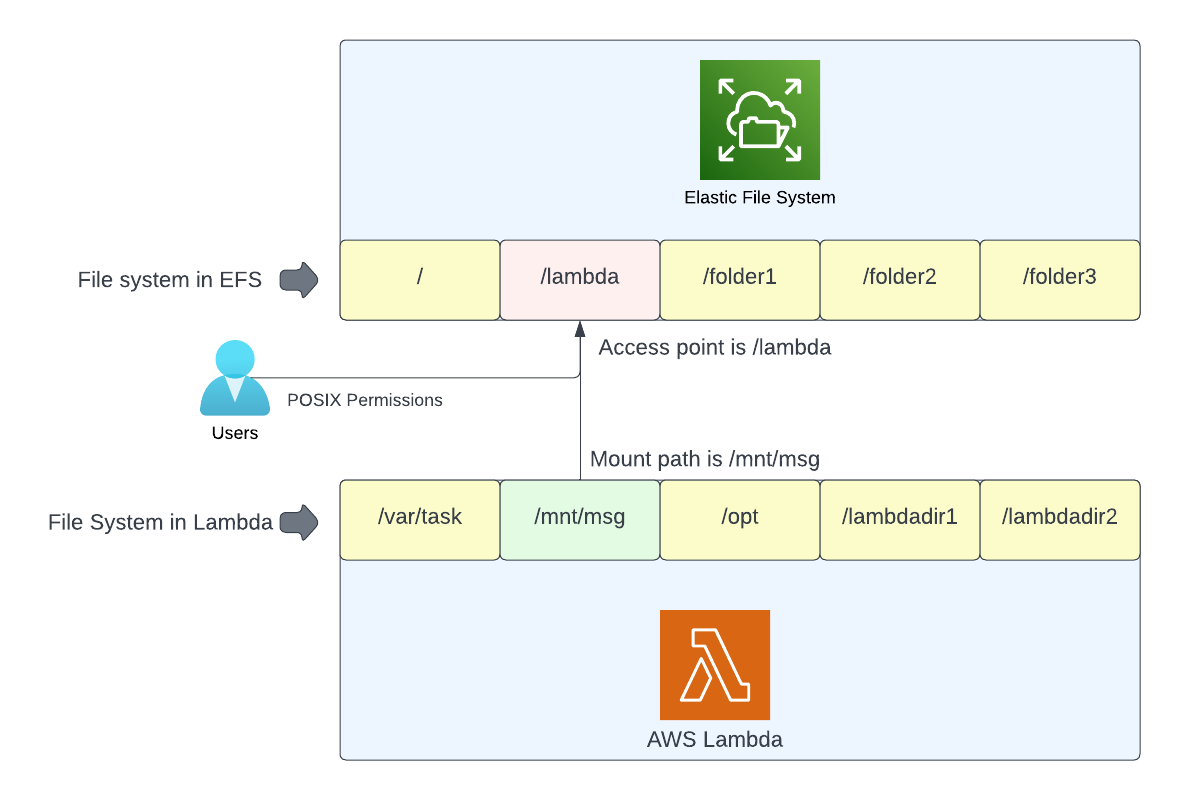
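The octal permissions string works the same way as with chmod: each digit is the sum of read (4), write (2) and execute (1), for the owner, the group and everyone else, in that order. The small hypothetical helper below, describePermissions, decodes the '750' used for the access point later in this tutorial.

```typescript
// Symbolic form of each octal digit: index = read(4) + write(2) + execute(1).
const BITS = ["---", "--x", "-w-", "-wx", "r--", "r-x", "rw-", "rwx"];

// Hypothetical helper: convert an octal permission string such as '750'.
export function describePermissions(octal: string): { owner: string; group: string; others: string } {
  if (!/^[0-7]{3}$/.test(octal)) {
    throw new Error(`expected three octal digits, got '${octal}'`);
  }
  const [owner, group, others] = [...octal].map((d) => BITS[Number(d)]);
  return { owner, group, others };
}

console.log(describePermissions("750")); // { owner: 'rwx', group: 'r-x', others: '---' }
```

With 750, the owning user can read, write and enter the directory, members of the owning group can read and enter it, and everyone else has no access.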

Security Groups

To access EFS from Lambda (or EC2, or any other client), inbound traffic on port 2049 (the NFS port) must be allowed. This is done through the security group of the file system. If you're using AWS CDK as described in this article, CDK creates this security group and the required rule for you. You only need to approve the security-related changes when you deploy the stack, as shown in the screenshot.
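If Lambda cannot reach the file system, invocations fail with a mount error (for example EFSMountTimeoutException) before your handler runs, and a blocked port 2049 is one cause to rule out. Below is a sketch of a hypothetical probeTcp helper using Node's net module, which you could run from a separate function placed in the same subnets and security group. The target would be the file system's DNS name, which follows the pattern file-system-id.efs.aws-region.amazonaws.com; fs-12345678 and us-east-1 in the usage comment are placeholders, and resolving that name assumes DNS resolution and DNS hostnames are enabled for the VPC.

```typescript
import * as net from "node:net";

// Hypothetical troubleshooting helper: resolves true if a TCP connection to
// host:port succeeds within timeoutMs, false otherwise. 2049 is the NFS port.
export function probeTcp(host: string, port = 2049, timeoutMs = 3000): Promise<boolean> {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (ok: boolean) => {
      socket.destroy();
      resolve(ok);
    };
    // No answer at all usually means a security group is silently dropping traffic.
    socket.setTimeout(timeoutMs, () => finish(false));
    socket.once("connect", () => finish(true));
    // Connection refused, DNS failure, and so on.
    socket.once("error", () => finish(false));
  });
}

// Usage (placeholder DNS name):
// const open = await probeTcp('fs-12345678.efs.us-east-1.amazonaws.com');
```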

Project Architecture

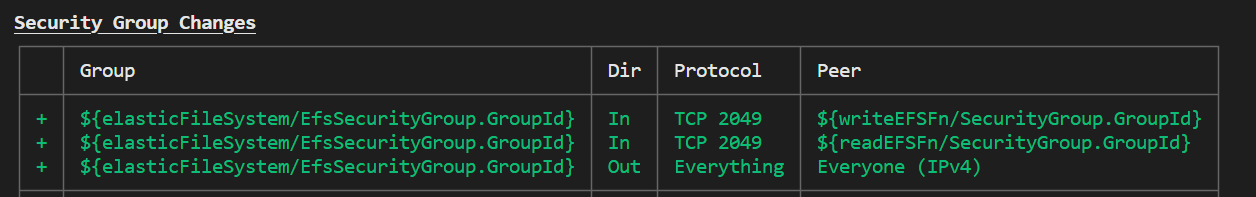

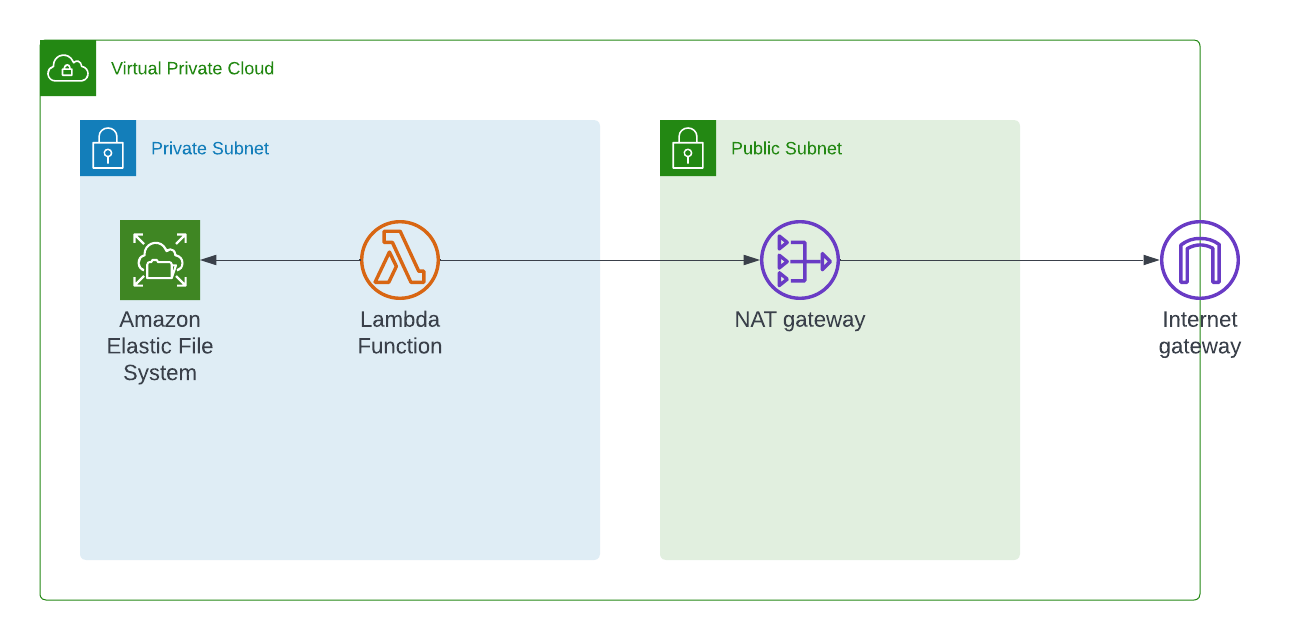

Lambda functions that use Elastic File System must be connected to a VPC, because EFS is reachable only through mount targets inside a VPC. For security reasons, we also want both the Lambda functions and the file system to be in private subnets, so that nothing outside the VPC can connect to them directly.

The diagram in this section shows the architecture for this project.

How to Create a Virtual Private Cloud to Host our Lambda and EFS

We're going to create two subnet groups: private and public. Because maxAzs is 2, CDK creates one subnet of each type in each of two Availability Zones. The Lambda functions and the EFS mount targets go in the private subnets.

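```typescript
// Imports used by the stack snippets in this tutorial (top of the stack file)
import { Duration, RemovalPolicy } from 'aws-cdk-lib';
import * as ec2 from 'aws-cdk-lib/aws-ec2';
import * as efs from 'aws-cdk-lib/aws-efs';
import * as iam from 'aws-cdk-lib/aws-iam';
import * as lambda from 'aws-cdk-lib/aws-lambda';
import { Runtime } from 'aws-cdk-lib/aws-lambda';
import { NodejsFunction, NodejsFunctionProps } from 'aws-cdk-lib/aws-lambda-nodejs';
import * as path from 'path';

// Inside the stack's constructor:
const vpc = new ec2.Vpc(this, 'VpcLambda', {
  maxAzs: 2,
  subnetConfiguration: [
    {
      cidrMask: 24,
      name: 'privatelambda',
      subnetType: ec2.SubnetType.PRIVATE_WITH_EGRESS,
    },
    {
      cidrMask: 24,
      name: 'public',
      subnetType: ec2.SubnetType.PUBLIC,
    },
  ],
});
```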
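Each cidrMask: 24 subnet has 256 addresses, but AWS reserves five addresses in every subnet, and the network interfaces Lambda uses in the VPC and the EFS mount targets draw their IPs from the rest. The helper below (hypothetical names usableAddresses and layout) does this arithmetic for the configuration above, assuming one subnet per subnet group per Availability Zone.

```typescript
// Addresses AWS reserves in every VPC subnet: network address, VPC router,
// DNS, one for future use, and the broadcast address.
const RESERVED_PER_SUBNET = 5;

export function usableAddresses(cidrMask: number): number {
  // IPv4 subnets in a VPC must be between /16 and /28.
  if (!Number.isInteger(cidrMask) || cidrMask < 16 || cidrMask > 28) {
    throw new Error("VPC subnets must be between /16 and /28");
  }
  return 2 ** (32 - cidrMask) - RESERVED_PER_SUBNET;
}

// Hypothetical summary: one subnet per subnet group in each Availability Zone.
export function layout(maxAzs: number, subnetGroups: number, cidrMask: number) {
  return { subnets: maxAzs * subnetGroups, usablePerSubnet: usableAddresses(cidrMask) };
}

console.log(layout(2, 2, 24)); // { subnets: 4, usablePerSubnet: 251 }
```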

When you create a subnet of type PRIVATE_WITH_EGRESS in AWS CDK, CDK also creates NAT Gateways and places them in the public subnets. By default, it creates one NAT Gateway per Availability Zone, so this stack gets two.

A NAT Gateway allows only outbound connections from your private subnets to the internet. No one on the public internet can initiate connections to your private subnets.

Note about using NAT Gateway

You don't need a NAT Gateway for Lambda to talk to EFS, as both are in the same private subnets of the VPC. However, many Lambda functions call external services, and they need internet connectivity to do so.

If you don't need any internet connectivity, you can avoid the NAT Gateway (and its cost) by using the PRIVATE_ISOLATED subnet type, which doesn't create one. In that case, select PRIVATE_ISOLATED in the Lambda functions' vpcSubnets as well.

How to Create Elastic File System to Store Our Files

Elastic File System offers two performance modes: General Purpose and Max I/O. The main difference is how many input/output operations per second (IOPS) the file system can scale to, traded against the latency of each operation. At the time of writing, General Purpose supported up to 35,000 IOPS, while Max I/O supported 500,000+ IOPS with higher per-operation latency.

For most cases, General Purpose mode is the better fit, and that's what we use here. Since this is just a tutorial, I've set the removal policy to DESTROY, because I want the file system to be deleted when we finally destroy the stack with the cdk destroy command.

For production systems, you should NOT use this setting, as you'll want the files to be durable.

```typescript
const elasticFileSystem = new efs.FileSystem(this, 'elasticFileSystem', {
  vpc,
  fileSystemName: 'LambdaVPC',
  performanceMode: efs.PerformanceMode.GENERAL_PURPOSE, // default
  removalPolicy: RemovalPolicy.DESTROY, // used only for development
});
```

What is access point in EFS and how to create one

As mentioned earlier, an access point is the application-specific entry point to EFS. An Elastic File System can hold many directories, but you want your client application (Lambda, in our case) to access only a specific path in it. The access point defines that path and the associated permissions.

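```typescript
const accesspoint = elasticFileSystem.addAccessPoint(
  'accesspointForLambda',
  {
    path: '/lambda',
    // Used only if /lambda does not exist yet: EFS creates it with this owner and mode
    createAcl: {
      ownerUid: '1000',
      ownerGid: '1000',
      permissions: '750',
    },
    // Every request made through this access point runs as this user and group
    posixUser: {
      uid: '1000',
      gid: '1000',
    },
  }
);
```

In the above code snippet, the access point exposes the path /lambda in EFS. If that directory doesn't exist yet, EFS creates it with owner user ID 1000, group ID 1000 and permissions 750. Every file operation made through the access point is performed as user ID 1000 and group ID 1000, so the Lambda function has full access to /lambda.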

Role, Permissions required for AWS Lambda to access EFS

We create an inline policy that allows mounting and writing to the elastic file system, and attach it to the Lambda role. The ClientMount action already grants read access, so no separate read action is needed.

```typescript
const efsAccess = new iam.PolicyDocument({
  statements: [
    new iam.PolicyStatement({
      resources: [elasticFileSystem.fileSystemArn],
      actions: [
        'elasticfilesystem:ClientMount', // mount and read
        'elasticfilesystem:ClientWrite', // write
      ],
      effect: iam.Effect.ALLOW,
    }),
  ],
});

const role = new iam.Role(this, 'lambdaRole', {
  assumedBy: new iam.ServicePrincipal('lambda.amazonaws.com'),
  managedPolicies: [
    iam.ManagedPolicy.fromAwsManagedPolicyName(
      'service-role/AWSLambdaBasicExecutionRole'
    ),
    iam.ManagedPolicy.fromAwsManagedPolicyName(
      'service-role/AWSLambdaVPCAccessExecutionRole'
    ),
  ],
  inlinePolicies: {
    efsAccess,
  },
});
```

If you're going to run Lambda in a VPC, the role needs the AWSLambdaVPCAccessExecutionRole managed policy, which lets Lambda create and manage the network interfaces it uses in your subnets.
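For reference, here is a sketch of the raw JSON policy document that an EFS client policy like this amounts to, built by a hypothetical helper efsClientPolicy. It uses only actions EFS defines for NFS clients: ClientMount (which already allows reading) and ClientWrite; EFS also defines ClientRootAccess, which this tutorial doesn't need. The region, account ID and file system ID in the usage line are made-up placeholders; in the stack, the ARN comes from elasticFileSystem.fileSystemArn at deploy time.

```typescript
interface PolicyStatement {
  Effect: "Allow" | "Deny";
  Action: string[];
  Resource: string;
}

// Hypothetical helper: JSON policy granting NFS mount and write access to one file system.
export function efsClientPolicy(region: string, accountId: string, fileSystemId: string) {
  const statement: PolicyStatement = {
    Effect: "Allow",
    // ClientMount alone gives read-only access; ClientWrite adds write access.
    Action: ["elasticfilesystem:ClientMount", "elasticfilesystem:ClientWrite"],
    Resource: `arn:aws:elasticfilesystem:${region}:${accountId}:file-system/${fileSystemId}`,
  };
  return { Version: "2012-10-17", Statement: [statement] };
}

// Placeholder values, for illustration only.
console.log(JSON.stringify(efsClientPolicy("us-east-1", "123456789012", "fs-12345678"), null, 2));
```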

Lambda functions

Common Lambda function props:

Below are the common Lambda function properties shared by both of our functions: the one that writes a file to EFS and the one that reads it. We set the timeout to 3 minutes and the memory to 256 MB.

```typescript
const nodeJsFunctionProps: NodejsFunctionProps = {
  bundling: {
    externalModules: [
      '@aws-sdk/*', // Use the AWS SDK v3 available in the Lambda runtime
    ],
  },
  // Node.js 16 has been deprecated on Lambda; Node.js 18+ runtimes ship AWS SDK v3
  runtime: Runtime.NODEJS_20_X,
  timeout: Duration.minutes(3), // Default is 3 seconds
  memorySize: 256,
};
```

Lambda function to write to EFS

We want the Lambda function that writes files to EFS to run in the VPC. Note that the value of the filesystem property is created from the EFS access point, and the mount path is set to /mnt/msg, meaning that any file written under /mnt/msg is written to EFS (inside the access point's /lambda directory).

```typescript
const writeEFSFn = new NodejsFunction(this, 'writeEFSFn', {
  entry: path.join(__dirname, '../src/lambdas', 'write-efs.ts'),
  ...nodeJsFunctionProps,
  functionName: 'writeEFSFn',
  vpc,
  // The private subnets created above
  vpcSubnets: { subnetType: ec2.SubnetType.PRIVATE_WITH_EGRESS },
  role,
  filesystem: lambda.FileSystem.fromEfsAccessPoint(accesspoint, '/mnt/msg'),
});
```

Lambda function code to write to EFS

The Lambda function code to write to EFS is pretty simple: define a file path under your mount path and write the file.

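```typescript
import { promises as fsPromises } from 'fs';

export const handler = async (
  event: any = {},
  context: any = {}
): Promise<any> => {
  // Files written under the mount path /mnt/msg are stored in EFS
  const filePath = `/mnt/msg/content.txt`;
  await fsPromises.writeFile(
    filePath,
    'Simulation of writing some large file to EFS'
  );
};
```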
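Since other functions read these files, a reader could open content.txt while a large write is still in progress. A common pattern is to write to a temporary file in the same directory and then rename it into place. This is a sketch with a hypothetical writeFileAtomically helper; it assumes rename() replaces the target atomically, which POSIX requires and which you should confirm for your workload on EFS.

```typescript
import { promises as fsPromises } from "node:fs";
import * as path from "node:path";
import { randomUUID } from "node:crypto";

// Hypothetical helper: write to a temporary file next to the target, then rename
// it over the target, so readers see either the old file or the complete new one.
export async function writeFileAtomically(
  filePath: string,
  data: string | Uint8Array
): Promise<void> {
  const tmpPath = path.join(
    path.dirname(filePath),
    `.${path.basename(filePath)}.${randomUUID()}.tmp`
  );
  try {
    await fsPromises.writeFile(tmpPath, data);
    await fsPromises.rename(tmpPath, filePath);
  } catch (err) {
    await fsPromises.rm(tmpPath, { force: true }); // best-effort cleanup
    throw err;
  }
}

// Usage inside the write handler:
// await writeFileAtomically('/mnt/msg/content.txt', 'Simulation of writing some large file to EFS');
```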

Lambda function properties to read the file from EFS

As we did for the write function, we set the filesystem property to associate the mount path with the EFS access point.

```typescript
const readEFSFn = new NodejsFunction(this, 'readEFSFn', {
  entry: path.join(__dirname, '../src/lambdas', 'read-efs.ts'),
  ...nodeJsFunctionProps,
  functionName: 'readEFSFn',
  vpc,
  // Must match a subnet group that exists in the VPC (no isolated subnets were created)
  vpcSubnets: { subnetType: ec2.SubnetType.PRIVATE_WITH_EGRESS },
  role,
  filesystem: lambda.FileSystem.fromEfsAccessPoint(accesspoint, '/mnt/msg'),
});
```

Lambda function code to read from EFS file

Just define the file path and read the file as you would normally.

```typescript
import { promises as fsPromises } from 'fs';

export const handler = async (
  event: any = {},
  context: any = {}
): Promise<any> => {
  const filePath = `/mnt/msg/content.txt`;
  const fileContents = await fsPromises.readFile(filePath);
  console.log('fileContents:', fileContents.toString());
};
```

You can test this EFS functionality by invoking the write Lambda function first and then the read Lambda function. You'll see the file contents in the read function's CloudWatch logs.
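The read function above uses readFile, which loads the whole file into the function's memory (256 MB in this tutorial). For genuinely large files, streaming is safer. As a sketch, the hypothetical sha256OfFile helper below reads a file in chunks with createReadStream and computes its SHA-256 digest, so memory use stays roughly constant regardless of file size.

```typescript
import { createReadStream } from "node:fs";
import { createHash } from "node:crypto";

// Hypothetical helper: hash a file chunk by chunk instead of loading it all at once.
export function sha256OfFile(filePath: string): Promise<string> {
  return new Promise((resolve, reject) => {
    const hash = createHash("sha256");
    createReadStream(filePath)
      .on("data", (chunk) => hash.update(chunk))
      .on("error", reject)
      .on("end", () => resolve(hash.digest("hex")));
  });
}

// Usage inside the read handler:
// const digest = await sha256OfFile('/mnt/msg/content.txt');
```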

Troubleshooting

Permission denied error:

You may get a permission error when trying to access EFS from Lambda, typically EACCES: permission denied.

If you get this error, it means that the user ID and group ID configured in the access point don't have the necessary permissions on the specified path in EFS.

Using the root directory (/) of EFS as the access point path is not recommended, as it may expose the complete file system to Lambda. If, for any reason, you want to use the root directory (/) as the access point path, you need to set the user ID and group ID to zero (0), the root ID, because by default only root can write to the root directory. With any other ID, you'll likely get EACCES: permission denied.

Another option is to create an EC2 instance, mount the file system on it and configure the necessary permissions there. If you use EFS only from Lambda, you don't need to do that: define the access point with the necessary permissions (as explained in this tutorial) and you'll be good.
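These EACCES cases follow ordinary POSIX permission rules. The sketch below is a simplified model of that check: isAllowed is a hypothetical helper that ignores supplementary groups and ACLs and treats UID 0 (root) as always allowed. It shows why UID 1000 cannot write to a root directory owned by UID 0 with permissions 755, yet can write to a /lambda directory created by the access point with owner 1000 and permissions 750.

```typescript
type Access = "read" | "write" | "execute";

// Permission bits within one octal digit.
const BIT: Record<Access, number> = { read: 4, write: 2, execute: 1 };

interface Caller {
  uid: number;
  gid: number;
}

interface Entry {
  ownerUid: number;
  ownerGid: number;
  mode: string; // octal permissions, e.g. '755'
}

// Simplified POSIX check: exactly one class (owner, group or others) applies.
export function isAllowed(caller: Caller, entry: Entry, access: Access): boolean {
  if (caller.uid === 0) return true;
  const mode = parseInt(entry.mode, 8);
  let digit: number;
  if (caller.uid === entry.ownerUid) {
    digit = (mode >> 6) & 7; // owner bits
  } else if (caller.gid === entry.ownerGid) {
    digit = (mode >> 3) & 7; // group bits
  } else {
    digit = mode & 7; // everyone else
  }
  return (digit & BIT[access]) !== 0;
}

const efsRoot: Entry = { ownerUid: 0, ownerGid: 0, mode: "755" };
const lambdaDir: Entry = { ownerUid: 1000, ownerGid: 1000, mode: "750" };
const lambdaUser: Caller = { uid: 1000, gid: 1000 };

console.log(isAllowed(lambdaUser, efsRoot, "write")); // false (EACCES)
console.log(isAllowed(lambdaUser, lambdaDir, "write")); // true
```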

Disadvantages of using EFS

EFS can only be used with Linux systems. If you have EC2 instances running Windows that you want to connect to EFS, you won't be able to do so.

Conclusion

I hope you've learnt a bit about how to use AWS Lambda with Elastic File System. Please let me know your thoughts in the comments.