Setting up a CI/CD Pipeline for AWS Fargate using AWS CodePipeline

In this guide, you'll learn how to create the infrastructure to host your dockerized application in AWS Fargate and deploy to it using AWS CodePipeline. Then we'll set up a CI/CD pipeline so that when you push changes to your application, AWS CodePipeline kicks in, builds your image, and pushes it to ECR. Your Fargate tasks are then updated with the latest image that was pushed to ECR.

Infrastructure for AWS Fargate:

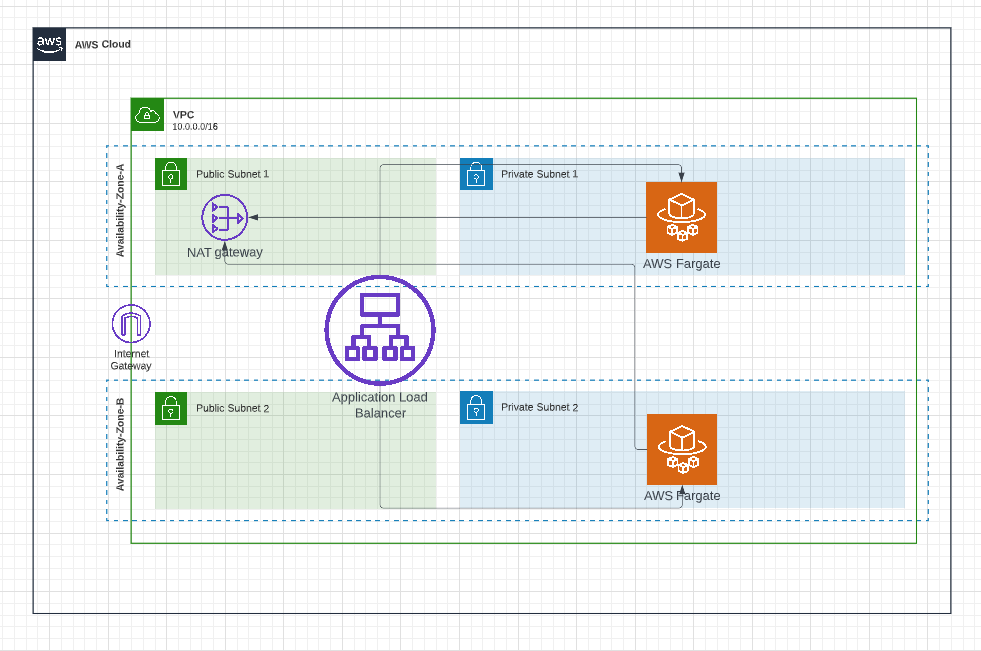

The diagram above shows the high-level architecture for hosting our dockerized application in AWS Fargate.

VPC & Subnets:

It is generally recommended to host your application in a separate VPC, so that it is logically separated from your other AWS resources and easier to manage. We're going to create a new VPC that spans 2 availability zones to provide redundancy for the application. In each availability zone we'll create 2 subnets (one public and one private), for a total of 4 subnets across the 2 availability zones.

Application load balancer & Fargate tasks:

We're going to host our Fargate application in private subnets so that no one can access it directly from the internet. This provides additional security for the application. Then we're going to create an application load balancer that resides in the public subnets. Its role is to route requests from users to our Fargate tasks in the private subnets.

NAT Gateway:

As mentioned earlier, our Fargate app is hosted in private subnets. Private subnets have no direct route to the internet, and this poses a problem: without internet connectivity, how are we going to pull the docker image and the additional packages that our application requires? A NAT gateway solves this problem. Resources in the private subnets can reach the internet through the NAT gateway for outbound traffic, while connections initiated from the internet are still blocked.
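To make the routing difference concrete, here is a minimal TypeScript sketch that models the two kinds of route tables as plain data. The type names, the helper functions, and the 10.0.0.0/16 VPC range are illustrative assumptions, not AWS APIs, and the model deliberately ignores security groups and public IP assignment.

```typescript
// Illustrative model only: these types and helpers are assumptions, not AWS APIs.
type RouteTarget = "local" | "internet-gateway" | "nat-gateway";

interface Route {
  destinationCidr: string;
  target: RouteTarget;
}

// A public subnet sends non-local traffic straight to the internet gateway.
const publicRouteTable: Route[] = [
  { destinationCidr: "10.0.0.0/16", target: "local" }, // assumed VPC range
  { destinationCidr: "0.0.0.0/0", target: "internet-gateway" },
];

// A private subnet sends non-local traffic to the NAT gateway instead.
const privateRouteTable: Route[] = [
  { destinationCidr: "10.0.0.0/16", target: "local" }, // assumed VPC range
  { destinationCidr: "0.0.0.0/0", target: "nat-gateway" },
];

// Returns the target of the default (0.0.0.0/0) route, if the table has one.
function defaultRouteTarget(table: Route[]): RouteTarget | undefined {
  return table.find((route) => route.destinationCidr === "0.0.0.0/0")?.target;
}

// Only a default route through an internet gateway allows connections
// that are initiated from the internet.
function acceptsInboundFromInternet(table: Route[]): boolean {
  return defaultRouteTarget(table) === "internet-gateway";
}

// Either gateway type gives the subnet outbound internet access.
function hasOutboundInternet(table: Route[]): boolean {
  const target = defaultRouteTarget(table);
  return target === "internet-gateway" || target === "nat-gateway";
}

console.log(hasOutboundInternet(privateRouteTable));        // true
console.log(acceptsInboundFromInternet(privateRouteTable)); // false
```

In this simplified view, the private subnets get exactly the property the architecture needs: outbound access for pulling images and packages, with no inbound path from the internet.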

Let us create this architecture using AWS CDK, an Infrastructure as Code (IaC) tool developed and maintained by AWS. If you're new to AWS CDK, I strongly suggest you read this guide to understand AWS CDK. No problem, I can wait.

Run the commands below to create the AWS CDK app:

    mkdir aws-cdk-fargate
    cd aws-cdk-fargate
    cdk init app --language=typescript

The CDK CLI creates a stack file in the lib folder.

VPC creation:

Now, we're going to create a VPC resource in this stack.

    const vpc = new ec2.Vpc(this, "FargateNodeJsVpc", {
      maxAzs: 2,
      natGateways: 1,
      subnetConfiguration: [
        {
          cidrMask: 24,
          name: "ingress",
          subnetType: ec2.SubnetType.PUBLIC,
        },
        {
          cidrMask: 24,
          name: "application",
          subnetType: ec2.SubnetType.PRIVATE_WITH_NAT,
        },
      ],
    });

The above construct creates a VPC that spans 2 availability zones and has one NAT gateway. In the subnet configuration, we ask CDK to create two types of subnets: public and private. Note that CDK automatically creates an internet gateway, as the public subnets require it. The private subnets route their outbound traffic through the NAT gateway because we've set the subnet type to PRIVATE_WITH_NAT. With natGateways set to 1, both private subnets share that single NAT gateway.

This construct creates 4 subnets (2 in each availability zone) and sets up the route tables to route traffic accordingly.
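As a rough illustration of what "2 subnet groups x 2 availability zones with cidrMask 24" means in address terms, the sketch below carves four consecutive /24 ranges out of a VPC range. The 10.0.0.0/16 range and the helper names are assumptions made for this example, and the order in which CDK actually assigns ranges to subnets is not something this sketch claims to reproduce.

```typescript
// Convert a dotted IPv4 address to a number.
function ipToNumber(ip: string): number {
  return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// Convert a number back to a dotted IPv4 address.
function numberToIp(value: number): string {
  return [24, 16, 8, 0]
    .map((shift) => Math.floor(value / Math.pow(2, shift)) % 256)
    .join(".");
}

// Carve `count` consecutive subnets of size /mask from the start of vpcCidr.
function carveSubnets(vpcCidr: string, mask: number, count: number): string[] {
  const base = vpcCidr.split("/")[0] ?? "";
  const size = Math.pow(2, 32 - mask); // number of addresses per subnet
  const start = ipToNumber(base);
  return Array.from(
    { length: count },
    (_, index) => `${numberToIp(start + index * size)}/${mask}`
  );
}

const subnetGroups = ["ingress", "application"]; // names from subnetConfiguration
const azCount = 2;                               // maxAzs
const cidrs = carveSubnets("10.0.0.0/16", 24, subnetGroups.length * azCount);

console.log(cidrs);
// [ '10.0.0.0/24', '10.0.1.0/24', '10.0.2.0/24', '10.0.3.0/24' ]
```

Each /24 holds 256 addresses, which is plenty for a couple of Fargate tasks and a load balancer.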

Application load balancer creation:

We can create the application load balancer using the construct below. The load balancer is internet-facing and is placed in the public subnets.

    const loadbalancer = new ApplicationLoadBalancer(this, "lb", {
      vpc,
      internetFacing: true,
      vpcSubnets: vpc.selectSubnets({
        subnetType: ec2.SubnetType.PUBLIC,
      }),
    });

Cluster creation:

You can use the below construct code to create the Fargate cluster. We're associating this cluster with the VPC that we created earlier.

    const cluster = new ecs.Cluster(this, "Cluster", {
      vpc,
      clusterName: "fargate-node-cluster",
    });

Execution role:

The ECS agent needs permission to pull images from ECR when it starts or updates your tasks. This permission is granted through the execution role. We create this role with the predefined managed policy AmazonECSTaskExecutionRolePolicy, which Fargate uses to pull the image and to write logs to CloudWatch.

    const executionRole = new iam.Role(this, "ExecutionRole", {
      // ECS tasks assume this role
      assumedBy: new iam.ServicePrincipal("ecs-tasks.amazonaws.com"),
      managedPolicies: [
        iam.ManagedPolicy.fromAwsManagedPolicyName(
          "service-role/AmazonECSTaskExecutionRolePolicy"
        ),
      ],
    });
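For readers less familiar with IAM, the assumedBy property corresponds to the role's trust policy. The plain object below is a sketch of the standard IAM trust policy shape for the ecs-tasks.amazonaws.com service principal; it is shown only for illustration, and the variable name is made up for this example.

```typescript
// Sketch of the trust relationship that `assumedBy` expresses.
// The variable name is hypothetical; the document shape is standard IAM JSON.
const executionRoleTrustPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      // Only the ECS tasks service may assume this role.
      Principal: { Service: "ecs-tasks.amazonaws.com" },
      Action: "sts:AssumeRole",
    },
  ],
};

console.log(JSON.stringify(executionRoleTrustPolicy, null, 2));
```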

Creating ECR Repository:

You need to create an ECR repository to store images. An ECR repository is created using the below construct.

    const repo = new ecr.Repository(this, "Repo", {
      repositoryName: "fargate-nodejs-app",
    });

Please note that our CI/CD pipeline will push images to this ECR repository.

Creating Fargate service:

We're going to use the ecs_patterns.ApplicationLoadBalancedFargateService construct to create the Fargate service, as shown below:

    new ecs_patterns.ApplicationLoadBalancedFargateService(
      this,
      "FargateNodeService",
      {
        cluster,
        taskImageOptions: {
          image: ecs.ContainerImage.fromRegistry("amazon/amazon-ecs-sample"),
          containerName: "nodejs-app-container",
          family: "fargate-node-task-defn",
          containerPort: 80,
          executionRole,
        },
        cpu: 256,
        memoryLimitMiB: 512,
        desiredCount: 2,
        serviceName: "fargate-node-service",
        taskSubnets: vpc.selectSubnets({
          subnetType: ec2.SubnetType.PRIVATE_WITH_NAT,
        }),
        loadBalancer: loadbalancer,
      }
    );

cluster: The cluster in which the Fargate service is created.

taskImageOptions: The properties specified here are used to create the task definition for your Fargate tasks.

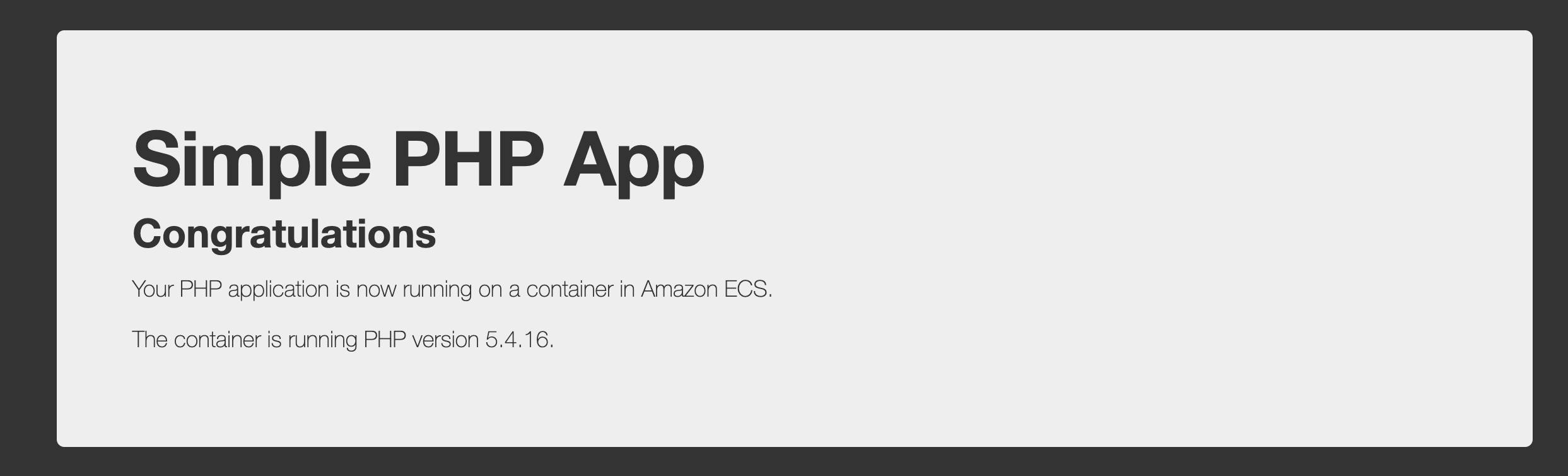

image: This is the image used to create the container in Fargate. Please note that we're not using our application image yet. Instead, we're using amazon/amazon-ecs-sample, a simple dockerized PHP application provided by AWS, because we need some image to create and run the Fargate service. Later, in the CI/CD pipeline, we'll replace this image with our custom application image.

family: This property is the name of the task definition. Any further update to the task definition increments the revision number under the same family value. This family property is used in our CI/CD pipeline to update the image.

containerPort: This is the port exposed by the container. As we're going to build a simple web application served over HTTP, we're using port 80.

executionRole: This is the role required by the ECS agent to pull images from ECR and to write logs to CloudWatch.

cpu: This value represents how much CPU the task needs. As I'm going to create a simple API with a couple of endpoints, I've used 256, which represents 0.25 vCPU. You can increase the value as per your computational needs.

memoryLimitMiB: This value represents how much memory (in MiB) the task needs. I've used 512.

desiredCount: This property dictates how many tasks you want to run as part of this service. I run 2 tasks for redundancy, and ECS tries to spread them across the private subnets of the two availability zones. If you're expecting heavy usage of your application, you may have to increase this value.

taskSubnets: This property tells ECS which subnets to launch the Fargate tasks in. For security reasons, we're using the private subnets of the VPC created earlier.

loadBalancer: The load balancer used by our service.
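The cpu and memoryLimitMiB values cannot be combined freely: Fargate only accepts certain memory sizes for each CPU size. The sketch below encodes the combinations for the sizes up to 4 vCPU as I understand them at the time of writing, so treat the table as an assumption to verify against the current AWS documentation (larger sizes exist and are left out). The function and variable names are made up for this example.

```typescript
// Build an inclusive list of values from min to max in fixed steps.
function range(min: number, max: number, step: number): number[] {
  const values: number[] = [];
  for (let value = min; value <= max; value += step) {
    values.push(value);
  }
  return values;
}

// Allowed memory sizes (MiB) per CPU value (CPU units, 1024 = 1 vCPU).
// Assumption: verify against the current Fargate documentation.
const allowedMemoryMiB: Record<number, number[]> = {
  256: [512, 1024, 2048],
  512: range(1024, 4096, 1024),
  1024: range(2048, 8192, 1024),
  2048: range(4096, 16384, 1024),
  4096: range(8192, 30720, 1024),
};

// Returns true when the cpu/memory pair is one of the listed combinations.
function isValidFargateSize(cpu: number, memoryMiB: number): boolean {
  const allowed = allowedMemoryMiB[cpu];
  return allowed !== undefined && allowed.includes(memoryMiB);
}

console.log(isValidFargateSize(256, 512));  // true: the values used in this guide
console.log(isValidFargateSize(256, 4096)); // false: too much memory for 0.25 vCPU
```

A check like this can be handy when you later scale the task up, since an unsupported pair is rejected at deployment time.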

Here is the complete CDK code for creating the Fargate service with an application load balancer:

    // Imports are not shown in the original snippets; these assume AWS CDK v2 (aws-cdk-lib).
    import { Stack, StackProps } from "aws-cdk-lib";
    import * as ec2 from "aws-cdk-lib/aws-ec2";
    import * as ecr from "aws-cdk-lib/aws-ecr";
    import * as ecs from "aws-cdk-lib/aws-ecs";
    import * as ecs_patterns from "aws-cdk-lib/aws-ecs-patterns";
    import { ApplicationLoadBalancer } from "aws-cdk-lib/aws-elasticloadbalancingv2";
    import * as iam from "aws-cdk-lib/aws-iam";
    import { Construct } from "constructs";

    export class AwsCdkFargateStack extends Stack {
      constructor(scope: Construct, id: string, props?: StackProps) {
        super(scope, id, props);

        // VPC with public and private subnets in 2 availability zones
        const vpc = new ec2.Vpc(this, "FargateNodeJsVpc", {
          maxAzs: 2,
          natGateways: 1,
          subnetConfiguration: [
            {
              cidrMask: 24,
              name: "ingress",
              subnetType: ec2.SubnetType.PUBLIC,
            },
            {
              cidrMask: 24,
              name: "application",
              subnetType: ec2.SubnetType.PRIVATE_WITH_NAT,
            },
          ],
        });

        // Internet-facing load balancer in the public subnets
        const loadbalancer = new ApplicationLoadBalancer(this, "lb", {
          vpc,
          internetFacing: true,
          vpcSubnets: vpc.selectSubnets({
            subnetType: ec2.SubnetType.PUBLIC,
          }),
        });

        const cluster = new ecs.Cluster(this, "Cluster", {
          vpc,
          clusterName: "fargate-node-cluster",
        });

        // Repository that the CI/CD pipeline pushes images to
        const repo = new ecr.Repository(this, "Repo", {
          repositoryName: "fargate-nodejs-app",
        });

        const executionRole = new iam.Role(this, "ExecutionRole", {
          assumedBy: new iam.ServicePrincipal("ecs-tasks.amazonaws.com"),
          managedPolicies: [
            iam.ManagedPolicy.fromAwsManagedPolicyName(
              "service-role/AmazonECSTaskExecutionRolePolicy"
            ),
          ],
        });

        // Fargate service running in the private subnets behind the load balancer
        new ecs_patterns.ApplicationLoadBalancedFargateService(
          this,
          "FargateNodeService",
          {
            cluster,
            taskImageOptions: {
              image: ecs.ContainerImage.fromRegistry("amazon/amazon-ecs-sample"),
              containerName: "nodejs-app-container",
              family: "fargate-node-task-defn",
              containerPort: 80,
              executionRole,
            },
            cpu: 256,
            memoryLimitMiB: 512,
            desiredCount: 2,
            serviceName: "fargate-node-service",
            taskSubnets: vpc.selectSubnets({
              subnetType: ec2.SubnetType.PRIVATE_WITH_NAT,
            }),
            loadBalancer: loadbalancer,
          }
        );
      }
    }

When you run cdk deploy to deploy the stack, all the AWS resources are created and the URL of the application load balancer is printed in the console. When you open that URL, you'll see the page served by the sample container.

Please note that this is just a sample app. In the next step, we're going to deploy our nodejs app.

CI/CD Pipeline:

If you're new to AWS CodePipeline CI/CD, I strongly recommend you read this guide on AWS CodePipeline. I can wait. Please read that guide first. :-)

Before creating the CI/CD pipeline, we're going to create a simple NodeJS app using express.

Simple NodeJS API:

    const express = require('express');

    const app = express();
    const PORT = process.env.PORT || 80;

    const products = [
      { id: 1, name: "Product 1", price: 100 },
      { id: 2, name: "Product 2", price: 200 },
      { id: 3, name: "Product 3", price: 300 },
    ];

    // Health status of the API
    app.get("/health", (req, res) => {
      res.status(200).send({ data: "OK" });
    });

    // List of products
    app.get("/", (req, res) => {
      res.status(200).send({ data: products });
    });

    app.listen(PORT, () => {
      console.log(`Listening at port:${PORT}`);
    });

As the objective of this guide is to deploy our nodejs application to an ECS service with Fargate as the launch type, I've kept this application pretty simple. It has just a couple of endpoints: the / endpoint returns the list of products and the /health endpoint returns the health status of the API.

An AWS ECS service requires an image, so we need a Dockerfile to build an image from this nodejs application.

Dockerfile for NodeJS API:

    FROM node:16-alpine

    WORKDIR /usr/src/app

    COPY package*.json ./
    RUN npm install

    COPY . .

    EXPOSE 80

    CMD [ "node", "app.js" ]

I've made this Dockerfile simple as our focus is on building CI/CD pipeline for our ECS service.

Below are the high-level steps that we need to follow in our AWS CodePipeline CI/CD to deploy the dockerized nodejs application to an AWS ECS service (with Fargate as the launch type).

AWS CodePipeline CI/CD to deploy to AWS Fargate:

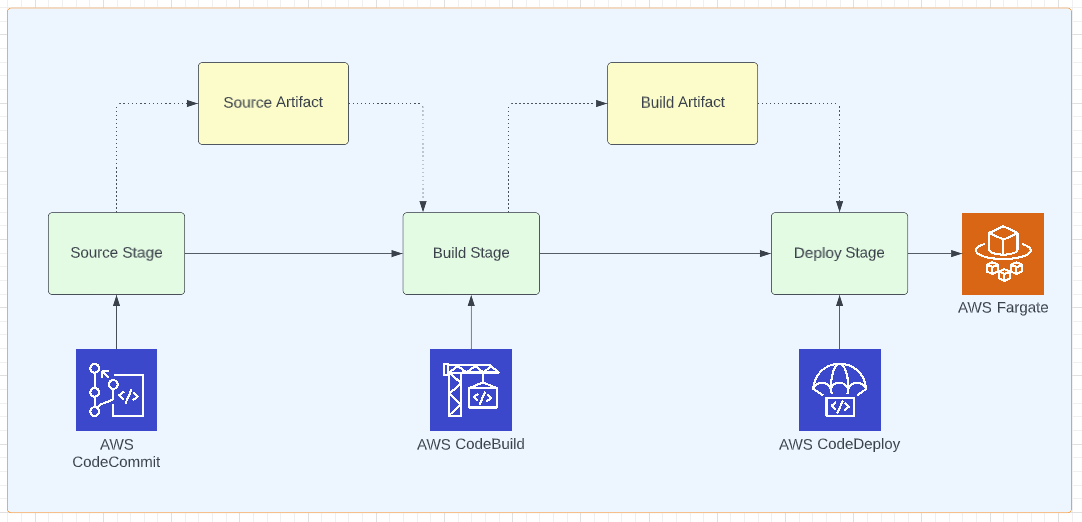

The diagram above is a pictorial representation of our CI/CD pipeline.

We're using AWS CodeCommit as the source repository, AWS CodeBuild to build the application, and the Amazon ECS deploy action (EcsDeployAction) to deploy to ECS. AWS CodePipeline orchestrates these services to form the CI/CD pipeline.

Source Stage:

In the source stage, you can either create a new CodeCommit repository or refer to an existing one. As I want the repository lifecycle (creation, deletion, etc.) to be independent of the CI/CD pipeline, I'm referring to an existing CodeCommit repository. Another reason is that the code repository is usually created by the development team, whereas the CI/CD pipeline is created by the devops team. Depending on the culture and size of the company (startups, for example), I've also seen both steps carried out by the development team.

In the code snippet below, we create a CodeCommitSourceAction and a source artifact, and refer to the existing AWS CodeCommit repository.

    // The third argument is the CodeCommit repository name. This snippet reuses
    // `ecrRepository`, which only works if the CodeCommit and ECR repositories
    // share the same name.
    const repo = codecommit.Repository.fromRepositoryName(
      this,
      "codecommit-repo",
      ecrRepository
    );

    const sourceOutput = new codepipeline.Artifact("SourceArtifact");

    const sourceStageAction = new cpactions.CodeCommitSourceAction({
      actionName: "source",
      repository: repo,
      branch: branchName || "master",
      output: sourceOutput,
      trigger: cpactions.CodeCommitTrigger.EVENTS,
    });

CodeCommitSourceAction: There are different source actions available in AWS CodePipeline. We're using CodeCommitSourceAction because our source is an AWS CodeCommit repository. This action accepts the following parameters in its constructor:

actionName: This is the name of the action. You can name it whatever you want.

repository: Reference to the repository on which this action is performed. We're referencing the existing AWS CodeCommit repository that we looked up above.

branch: Branch name of the repository. The snippet falls back to master when no branch name is supplied, so the pipeline is triggered when a push happens on that branch.

output: Where to store the output of this action. We're using the source artifact that we created above.

trigger: How does AWS CodePipeline know that we've pushed changes to the repository? By default, it uses AWS CloudWatch Events, so you don't strictly need to set this property. The other option is polling for changes, but that option is not recommended.

Build Stage:

The build stage is a bit different from the other stages, as we need to create a CodeBuild PipelineProject. This CodeBuild project requires access to ECR because it builds the image and pushes it to ECR, so we create a role for that purpose.

CodeBuild runs each build inside a docker container. As we want to build a docker image inside that container, we need to run it in privileged mode. You can specify the build image and compute type based on your needs. I've specified AMAZON_LINUX_2_3 as the build image and SMALL as the compute type.

Please note that all the build commands live in the buildspec.yml file. You specify the path of the buildspec file in the CodeBuild project.

Finally, CodeBuildAction ties all these components (CodeBuild project, input artifact, output artifact) together. Below is the code snippet for the build stage.

    const buildOutput = new codepipeline.Artifact("DockerBuildArtifact");

    // Role that lets CodeBuild push images to ECR
    const buildRole = new iam.Role(this, "buildRole", {
      assumedBy: new iam.ServicePrincipal("codebuild.amazonaws.com"),
      managedPolicies: [
        iam.ManagedPolicy.fromManagedPolicyArn(
          this,
          "ECRAccess",
          "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryFullAccess"
        ),
      ],
    });

    const buildProject = new codebuild.PipelineProject(
      this,
      "CodeBuildProject",
      {
        projectName: "app-build",
        vpc,
        subnetSelection: vpc.selectSubnets({
          subnetType: ec2.SubnetType.PRIVATE_WITH_NAT,
        }),
        buildSpec: codebuild.BuildSpec.fromSourceFilename("./buildspec.yml"),
        environment: {
          buildImage: codebuild.LinuxBuildImage.AMAZON_LINUX_2_3,
          computeType: codebuild.ComputeType.SMALL,
          privileged: true, // required to run docker build inside the build container
        },
        role: buildRole,
      }
    );

    const codebuildAction = new cpactions.CodeBuildAction({
      actionName: "codebuildaction",
      project: buildProject,
      input: sourceOutput,
      runOrder: 1,
      // These values are read by buildspec.yml
      environmentVariables: {
        ECR_REGISTRY: { value: ecrRegistry },
        ECR_REPOSITORY: { value: ecrRepository },
        CONTAINER_NAME: { value: containerName },
      },
      outputs: [buildOutput],
    });
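The snippet above does not show where ecrRegistry comes from. As a hedged sketch, the registry host of a private ECR registry in the standard commercial regions follows the pattern <account-id>.dkr.ecr.<region>.amazonaws.com, and the image URI is the registry, the repository name, and a tag joined together. The helper names, the account ID, and the region below are placeholders made up for this example.

```typescript
// Registry host for a private ECR registry (standard commercial regions).
function ecrRegistryHost(accountId: string, region: string): string {
  return `${accountId}.dkr.ecr.${region}.amazonaws.com`;
}

// Full image URI, matching $ECR_REGISTRY/$ECR_REPOSITORY:latest in the buildspec.
function imageUri(registry: string, repository: string, tag: string = "latest"): string {
  return `${registry}/${repository}:${tag}`;
}

// Placeholder account ID and region, for illustration only.
const exampleRegistry = ecrRegistryHost("123456789012", "us-east-1");
const exampleImageUri = imageUri(exampleRegistry, "fargate-nodejs-app");

console.log(exampleImageUri);
// 123456789012.dkr.ecr.us-east-1.amazonaws.com/fargate-nodejs-app:latest
```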

Deploy Stage:

The deploy stage is pretty simple. We just need to specify the ECS service and feed the output artifact of the build stage into the deploy stage as its input.

    const deployAction = new cpactions.EcsDeployAction({
      actionName: "deploytoecsaction",
      service,
      input: buildOutput,
    });

AWS CodePipeline:

AWS CodePipeline ties all these stages together. Please note that the order of the stages in the stages property matters. The pipeline runs from the source stage to the build stage and finally to the deploy stage.

    const pipeline = new codepipeline.Pipeline(this, "FargateCodePipeline", {
      pipelineName: "fargate-code-pipeline",
      crossAccountKeys: false,
      stages: [
        {
          stageName: "Source",
          actions: [sourceStageAction],
        },
        {
          stageName: "build",
          actions: [codebuildAction],
        },
        {
          stageName: "deploy",
          actions: [deployAction],
        },
      ],
    });

We've constructed this pipeline entirely within AWS CDK.
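One way to see why the stage order matters is to follow the artifacts: each stage can only consume an artifact that an earlier stage has produced. The sketch below models that rule with plain data. The StageSketch type and the findMissingInputs helper are made up for this example and are not part of the CDK or CodePipeline APIs; the stage and artifact names are the ones used in this guide.

```typescript
// Illustrative model of a pipeline stage and the artifacts it uses.
interface StageSketch {
  name: string;
  inputs: string[];
  outputs: string[];
}

const pipelineStages: StageSketch[] = [
  { name: "Source", inputs: [], outputs: ["SourceArtifact"] },
  { name: "build", inputs: ["SourceArtifact"], outputs: ["DockerBuildArtifact"] },
  { name: "deploy", inputs: ["DockerBuildArtifact"], outputs: [] },
];

// Returns "stage:artifact" for every input not produced by an earlier stage.
function findMissingInputs(ordered: StageSketch[]): string[] {
  const available = new Set<string>();
  const missing: string[] = [];
  for (const stage of ordered) {
    for (const input of stage.inputs) {
      if (!available.has(input)) {
        missing.push(`${stage.name}:${input}`);
      }
    }
    stage.outputs.forEach((output) => available.add(output));
  }
  return missing;
}

console.log(findMissingInputs(pipelineStages));
// []
console.log(findMissingInputs([...pipelineStages].reverse()));
// [ 'deploy:DockerBuildArtifact', 'build:SourceArtifact' ]
```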

BuildSpec file:

In the nodejs code repository, we need to add buildspec.yml at the root of the repository with the contents below. I'll explain the significance of each section in a bit.

    version: 0.2

    phases:
      install:
        runtime-versions:
          nodejs: 12
        commands:
          - npm i npm@latest -g
          - npm cache clean --force
          - rm -rf node_modules package-lock.json
      pre_build:
        commands:
          - npm install
          - aws ecr get-login-password | docker login --username AWS --password-stdin $ECR_REGISTRY
      build:
        commands:
          - docker build -t $ECR_REGISTRY/$ECR_REPOSITORY:latest .
          - docker push $ECR_REGISTRY/$ECR_REPOSITORY:latest
      post_build:
        commands:
          - python scripts/create-image-defintion.py imagedefinitions.json $CONTAINER_NAME $ECR_REGISTRY/$ECR_REPOSITORY:latest

    artifacts:
      files:
        - imagedefinitions.json

install phase: In the install phase, we ask for nodejs version 12 to be installed, upgrade npm, and clear any cached or previously installed packages.

pre_build phase: In the pre_build phase, we install the npm dependencies and log in to ECR. Please note that the registry is passed in as an environment variable.

build phase: In the build phase, we build the image and push it to ECR.

post_build phase: In the post_build phase, we generate a JSON file with the image definitions using a simple python script.

In the artifacts section, the file imagedefinitions.json is listed. This file is used by the EcsDeployAction that we defined in the deploy stage of AWS CodePipeline.
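The python script itself is not shown in this guide. As an assumption about what it produces, the standard ECS deploy action expects imagedefinitions.json to be a JSON array of objects with a name (the container name from the task definition) and an imageUri. The sketch below builds that content in TypeScript, to match the rest of the code in this guide; the function name and the example URI are made up for illustration.

```typescript
// One entry per container whose image should be replaced during deployment.
interface ImageDefinition {
  name: string;     // container name from the task definition
  imageUri: string; // full image URI, including the tag
}

// Builds the JSON content of imagedefinitions.json for a single container.
function buildImageDefinitions(containerName: string, imageUri: string): string {
  const definitions: ImageDefinition[] = [{ name: containerName, imageUri }];
  return JSON.stringify(definitions);
}

// Placeholder registry host, for illustration only.
const imageDefinitionsJson = buildImageDefinitions(
  "nodejs-app-container",
  "123456789012.dkr.ecr.us-east-1.amazonaws.com/fargate-nodejs-app:latest"
);

console.log(imageDefinitionsJson);
```

The container name must match the containerName set in taskImageOptions, which is why it is passed to the build as the CONTAINER_NAME environment variable.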

Now, when you push changes to this nodejs app, it will be built automatically and deployed to the ECS service.

Please share your comments below. If you liked this guide, please subscribe so that I can let you know whenever I publish new guides.